Unveiling the Next Frontier in AI Hardware: Biological Computing with Lab-Grown Neurons

Artificial intelligence (AI) hardware may be approaching a major shift with biological computing, in which lab-grown neurons are integrated with traditional processors. Proponents argue that this approach could eventually surpass Graphics Processing Units (GPUs) from Nvidia and AMD, and machine-learning accelerators from SiMa.ai, in efficiency and adaptability.

Two companies—Biological Black Box (BBB) and Cortical Labs—are leading this frontier, developing brain-inspired AI chips that could redefine the future of AI model training, computer vision, and energy-efficient deep learning.

How Biological AI Chips Work

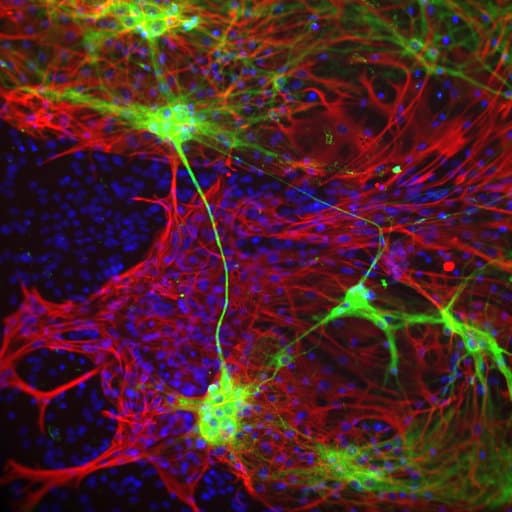

Biological computing integrates lab-grown neurons, derived from human stem cells or rat neurons, into computing systems. These networks function as self-learning processors, dynamically forming new connections to optimize performance.

Unlike AI models running on Nvidia GPUs, which must be retrained to absorb new data, biological chips are designed to adapt in real time, which would suit AI applications that demand continuous learning. Their low energy consumption could also make them a more sustainable option for high-performance computing.
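
To make "dynamically forming new connections" more concrete, here is a toy sketch of Hebbian plasticity, the textbook rule that units which fire together strengthen their connection. It is an illustration only: the function names, learning rate, and decay value are assumptions made for this example, and it does not model real neurons or either company's hardware.

```python
# Toy Hebbian plasticity: illustrative only, not a model of any real bio-chip.

def hebbian_step(weights, activity, learning_rate=0.1, decay=0.01):
    """Return updated weights after one Hebbian step.

    weights[i][j] is the connection strength from unit i to unit j.
    Connections between co-active units grow; every connection decays slightly.
    """
    n = len(activity)
    updated = []
    for i in range(n):
        row = []
        for j in range(n):
            if i == j:
                row.append(0.0)  # no self-connections
                continue
            growth = learning_rate * activity[i] * activity[j]
            row.append((1.0 - decay) * weights[i][j] + growth)
        updated.append(row)
    return updated


def train(patterns, n_units, epochs=20):
    """Repeatedly present activity patterns, starting from zero weights."""
    weights = [[0.0] * n_units for _ in range(n_units)]
    for _ in range(epochs):
        for pattern in patterns:
            weights = hebbian_step(weights, pattern)
    return weights


if __name__ == "__main__":
    # Units 0 and 1 always fire together; unit 2 only fires alone.
    patterns = [[1, 1, 0], [0, 0, 1]]
    w = train(patterns, n_units=3)
    print(f"0->1: {w[0][1]:.3f}, 0->2: {w[0][2]:.3f}")
```

After training, the connection between the two co-active units has grown while the connection to the unit that fires alone stays at zero. No separate retraining pass is involved, because the weights change as the patterns arrive.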

Biological Black Box (BBB) and the Bionode Platform

BBB’s Breakthrough in AI Processing

Baltimore-based startup Biological Black Box (BBB) has unveiled its Bionode platform, a hybrid computing system integrating living neurons with traditional processors. This innovation positions BBB as a leading player in the next generation of AI hardware.

Features and Benefits of Bionode

- Energy Efficiency – Uses less power than GPUs, reducing operational costs.

- Faster AI Model Training – Enhances inference speed and classification accuracy.

- Adaptability – Eliminates manual retraining, allowing continuous learning.

- Hybrid Computing Approach – Works alongside Nvidia GPUs rather than replacing them.

Applications of BBB’s Bionode

- Computer Vision: Enhances object recognition with reduced GPU dependency.

- Large Language Models (LLMs): Improves natural language processing (NLP) efficiency.

- Energy-Efficient AI Training: Lowers the power consumption of AI workloads.

As AI hardware continues to evolve, biological computing could be the key to scalable, low-power AI processing. TechCrunch’s coverage of BBB’s Bionode platform has more detail, including how it compares to traditional AI chips.

Cortical Labs’ CL1: The First Commercial Brain-Powered Computer

What Is CL1?

Cortical Labs has introduced CL1, a biological computer powered by lab-grown human neurons. Unlike traditional silicon-based AI chips, CL1 leverages living brain cells to process data, learn patterns, and enhance artificial intelligence performance.

The company offers “Wetware-as-a-Service”, allowing developers and researchers to rent bio-computing power via the cloud, eliminating the need for costly hardware investments.

How CL1 Works

The CL1 bio-computer is built using neurons cultivated in a lab and placed on a silicon chip equipped with electrode arrays. This setup allows neurons to:

- Communicate via electrical signals, mimicking human brain activity.

- Interact with a Biological Intelligence Operating System (biOS) to interpret and process data.

- Optimize AI algorithms dynamically, reducing the need for constant retraining.
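
The loop described above (encode data as stimulation, let the culture respond, read the electrodes, decode the result) can be sketched in simulation. Everything here is an assumption for illustration: `SimulatedCulture`, `encode`, `decode`, and `run_loop` are hypothetical names, the "culture" is just a noisy function, and none of this reflects the actual biOS interface.

```python
import random


class SimulatedCulture:
    """Hypothetical stand-in for neurons on an electrode array; not a real API."""

    def __init__(self, n_electrodes, seed=0):
        self._rng = random.Random(seed)
        # Each electrode site responds with its own fixed sensitivity.
        self._gain = [self._rng.uniform(0.5, 1.5) for _ in range(n_electrodes)]
        self._last_stimulus = [0.0] * n_electrodes

    def stimulate(self, amplitudes):
        """Apply one stimulation amplitude (0..1) per electrode."""
        if len(amplitudes) != len(self._gain):
            raise ValueError("one amplitude per electrode is required")
        self._last_stimulus = list(amplitudes)

    def record(self):
        """Return a noisy, non-negative spike count per electrode."""
        counts = []
        for gain, amp in zip(self._gain, self._last_stimulus):
            noisy = 10.0 * gain * amp + self._rng.gauss(0.0, 0.5)
            counts.append(max(0, round(noisy)))
        return counts


def encode(value, n_electrodes):
    """Map a value in [0, 1] to a one-hot stimulation pattern (place coding)."""
    index = min(int(value * n_electrodes), n_electrodes - 1)
    return [1.0 if i == index else 0.0 for i in range(n_electrodes)]


def decode(spike_counts):
    """Read out the most active electrode as a value in [0, 1]."""
    n = len(spike_counts)
    best = max(range(n), key=lambda i: spike_counts[i])
    return (best + 0.5) / n


def run_loop(culture, inputs, n_electrodes):
    """Stimulate, record, and decode once per input value."""
    outputs = []
    for value in inputs:
        culture.stimulate(encode(value, n_electrodes))
        outputs.append(decode(culture.record()))
    return outputs


if __name__ == "__main__":
    culture = SimulatedCulture(n_electrodes=8)
    print(run_loop(culture, [0.1, 0.5, 0.9], n_electrodes=8))
```

The sketch separates the biological substrate (`stimulate` and `record`) from the software that gives its activity meaning (`encode` and `decode`). That interpreting role is the one the article attributes to the operating-system layer.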

By adapting in real time, CL1 is intended to handle such workloads more efficiently than traditional Graphics Processing Units (GPUs), offering a glimpse into the future of hybrid computing.

Applications of CL1

Cortical Labs’ biological AI technology has multiple high-impact applications:

- Deep Learning Acceleration – CL1 is pitched as training AI models faster while consuming less energy than GPUs.

- Drug Discovery & Disease Modeling – By analyzing neural interactions, researchers can gain insights into neurodegenerative diseases.

- Gaming AI – Cortical Labs’ earlier DishBrain system learned to play Pong within minutes, showing promise for adaptive game AI.

Commercial Availability

- Price: The CL1 biological AI chip costs $35,000 per unit.

- Cloud Access: Developers can rent bio-computing power via a subscription-based model.
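
For readers weighing purchase against rental, the comparison comes down to simple arithmetic. The sketch below uses the $35,000 unit price quoted above. The weekly rental rate is a placeholder invented for this example, not a published price, and the calculation ignores running costs such as consumables and maintenance.

```python
import math


def break_even_weeks(purchase_price, weekly_rental_rate):
    """Weeks of rental after which total rent meets or exceeds the purchase price."""
    if purchase_price < 0 or weekly_rental_rate <= 0:
        raise ValueError("price must be non-negative and rate must be positive")
    return math.ceil(purchase_price / weekly_rental_rate)


CL1_UNIT_PRICE_USD = 35_000         # figure quoted in the article
HYPOTHETICAL_WEEKLY_RATE_USD = 500  # placeholder, NOT a published price

if __name__ == "__main__":
    weeks = break_even_weeks(CL1_UNIT_PRICE_USD, HYPOTHETICAL_WEEKLY_RATE_USD)
    print(f"Rental matches the purchase price after {weeks} weeks")
```

With the placeholder rate, rental would match the purchase price after 70 weeks. Substitute the actual subscription price to get a meaningful figure.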

Biological AI vs. Silicon-Based GPUs: Key Differences

AI hardware is evolving beyond silicon-based computing, and biological AI chips offer distinct advantages over traditional GPUs. Here’s a side-by-side comparison:

| Feature | Biological AI (Neurons) | Traditional GPUs (Silicon-Based) |
|---|---|---|
| Power Consumption | Extremely low | Very high |
| Processing Adaptability | Self-learning, real-time adaptation | Requires manual retraining |
| Hardware Lifespan | Neurons remain functional for 12+ months | Transistors degrade over time |
| Scalability | Modular & expandable | Expensive, energy-intensive scaling |

Biological AI systems learn, adapt, and process data like a human brain, whereas GPUs follow fixed computational patterns. This biological advantage could redefine the future of AI model training and deployment.

External Source: MIT Technology Review

The Future of AI: Hybrid Computing with Biological, Silicon, and Quantum Chips

What’s Next for AI Hardware?

The evolution of AI hardware is shifting toward hybrid computing models, where silicon, biological, and quantum computing coexist. This modular approach allows AI systems to:

- Leverage biological neurons for low-power, real-time adaptation.

- Utilize silicon GPUs for high-speed parallel processing.

- Incorporate quantum computing for complex problem-solving at scale.
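
One way to picture this division of labor is as a router that sends each workload to the backend suited to it. The sketch below is a minimal illustration under stated assumptions: the `Backend` and `Workload` types and the routing rules are hypothetical and simply mirror the three bullets above. It does not describe any existing scheduler.

```python
from dataclasses import dataclass
from enum import Enum


class Backend(Enum):
    BIOLOGICAL = "biological"
    SILICON = "silicon"
    QUANTUM = "quantum"


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of a job, used only for this illustration."""
    name: str
    needs_online_adaptation: bool = False
    is_combinatorial_search: bool = False


def route(workload):
    """Pick a backend using the article's three-way split."""
    if workload.is_combinatorial_search:
        return Backend.QUANTUM      # complex problem-solving at scale
    if workload.needs_online_adaptation:
        return Backend.BIOLOGICAL   # low-power, real-time adaptation
    return Backend.SILICON          # high-speed parallel processing


if __name__ == "__main__":
    jobs = [
        Workload("batch image classification"),
        Workload("sensor stream that drifts over time", needs_online_adaptation=True),
        Workload("route optimization", is_combinatorial_search=True),
    ]
    for job in jobs:
        print(f"{job.name} -> {route(job).value}")
```

A real system would also need to weigh cost, latency, and availability. The fixed priority order used here is only the simplest possible policy.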

As AI and neuroscience continue to merge, researchers are working on human-brain-inspired AI models, enhancing machine intelligence with biological efficiency.

Key Takeaways

- Biological computing is no longer theoretical: commercial products are available today.

- AI chips using living neurons could replace GPUs in select tasks, reducing costs and energy consumption.

- Ethical concerns remain, but leading companies are collaborating with bioethicists and regulatory bodies to ensure responsible AI development.

Final Thoughts

Biological AI could reshape the future of computing by offering an energy-efficient, self-learning alternative to traditional GPUs. Companies like BBB and Cortical Labs are at the forefront of this transformation, suggesting that AI does not have to rely solely on transistors.

Would you trust an AI chip powered by human brain cells? Share your thoughts below.
